Is a Restricted Software Environment a Spherical Cow?

1. Of Physics and Poetry

In all fields of science, simplifying the real world in order to successfully develop various theories for the imaginary world is quite normal. Physicists have a full set of artifacts: a perfect gas, a point mass, a perfectly rigid body, an ideal fluid, etc.

And it works! The perfect gas law describes real gases quite well, and classical mechanics successfully handles motion calculations for bodies of different sizes (as long as we stay out of the quantum world or, at the other extreme, as long as the masses involved don’t bring general relativity into play).

The smart name for this process is model reduction. In other words, we simplify a real system to the max, develop a mathematical model capable of predicting the system’s behavior and then, boom, it just so happens that the real system complies with the regularities we discovered.

A similar method is applied in information security. Today we will review one such artifact, the restricted software environment, and see how it helps solve the real problem of establishing the required level of information security in real systems.

2. How Security Modeling Turned Into Science

But first things first: let’s talk about the historical background. In the 1970s, a really important event occurred in the field of information security. The United States Department of Defense bought a computer. Something like this:

Honeywell-6080 mainframe. The girl in the photo is there either as an eye-catcher or to help convey the scale…

Since this was many moons ago, when trees were small and computers were huge, the Department of Defense had enough money (or, maybe, space) for just one computer. Naturally, they planned to use it to process secret data. However, by that time the predecessor of the Internet, ARPANET, already existed, and the Department of Defense apparently did not want to limit itself to working with secret data but also felt like researching some funny cat pictures…

Consequently, a wild challenge appeared: how could classified and non-classified data be processed on the same mainframe? Moreover, a multi-user environment was required, and the intended end users were of two types: department officers and civilians from ARPANET (and, as every military man knows, civilians cannot be trusted at all).

That was how Project №522B started. It was a research and development project intended for… Well, judging by the results, its main goal was to create an academic discipline named “Theoretical Foundations of Computer Security”, describing almost every security approach used in modern software.

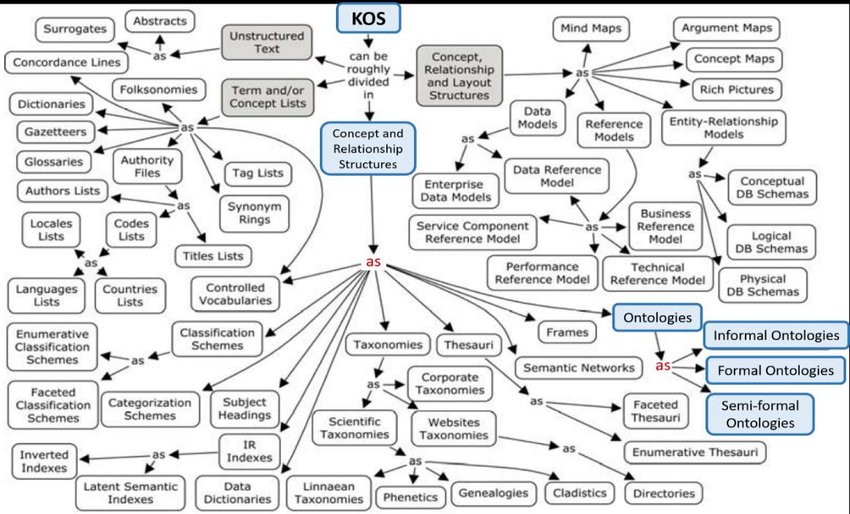

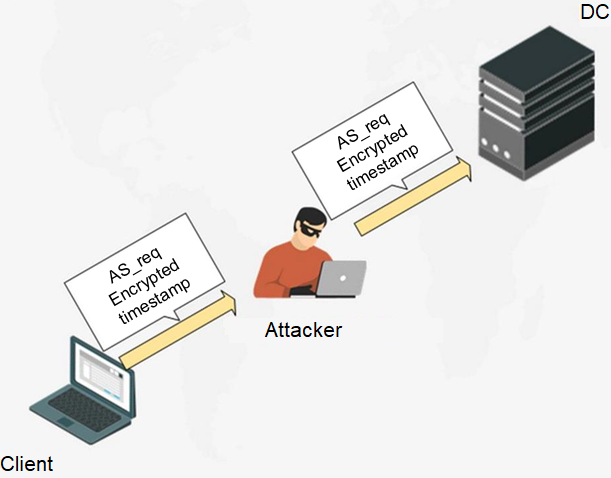

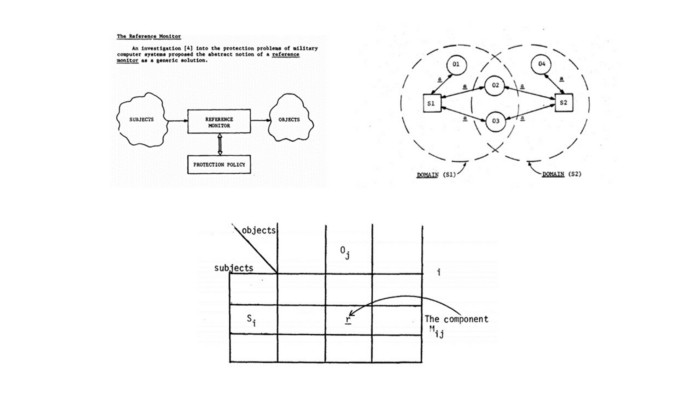

Screenshots of the original Project 522B reports: reference monitor, security domains and access matrix. These are just a small part of the theoretical concepts developed within this project.

A separate article could be written about the results of the Project 522B research, as well as about its participants, who became legends in the world of information security. However, this time our interest is limited to one specific topic: the subject-object integrity model.

3. Subject-Object Integrity Model in Plain English

So, we have decided that, in order to develop a theoretical model of the system that is useful for solving the security problem, we need to simplify the initial system somehow.

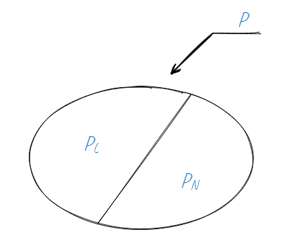

The simplification is easy to find. Let’s consider the whole system as a collection of subjects (active entities, e.g. processes) and objects (passive entities, e.g. data files). Subjects somehow interact with objects, i.e. they access them (or create information flows). We will divide the set of all possible accesses (P) into authorized accesses (PL) and unauthorized accesses (PN).
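As a minimal sketch of this simplified model, the split of P into PL and PN can be written as plain set operations. The names `P`, `PL` and `PN` mirror the text; the `Access` type and the concrete subjects, objects and operations are made up purely for illustration.

```python
from itertools import product
from typing import NamedTuple


class Access(NamedTuple):
    subject: str    # active entity, e.g. a process
    obj: str        # passive entity, e.g. a data file
    operation: str  # kind of access (hypothetical labels)


# Hypothetical toy system: two processes, two files, two operations.
subjects = ["editor", "backup"]
objects = ["report.txt", "secret.txt"]
operations = ["read", "write"]

# P: every access that is possible in the system.
P = {Access(s, o, op) for s, o, op in product(subjects, objects, operations)}

# PL: the accesses the policy authorizes (chosen arbitrarily here).
PL = {
    Access("editor", "report.txt", "read"),
    Access("editor", "report.txt", "write"),
    Access("backup", "report.txt", "read"),
    Access("backup", "secret.txt", "read"),
}

# PN: everything that is not explicitly authorized.
PN = P - PL


def is_authorized(access: Access) -> bool:
    """Return True if the access belongs to PL."""
    return access in PL
```

In this toy system P is tiny and fixed, which is exactly the kind of simplification a real system does not allow.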

Yet, this model is oversimplified… How about bringing it a little bit closer to reality?

- Subjects can appear and disappear, since processes are started and stopped in computer systems. At the same time, a subject cannot appear out of the clear blue sky: in real systems a subject is created out of some data previously contained in the system (an executable file, a script, etc.), i.e. there is always an object at the beginning.

- Objects can affect subject behavior, for example, if the object is an application configuration file.

- Objects can be changed (subjects gain access to objects for a reason, for instance to change something).

- A special subject (let’s name it a reference monitor) has to monitor compliance with access control policy (i.e. ensure that each access belongs to PL).

- The reference monitor also has its own related objects (containing the description of PL) that affect its operation.

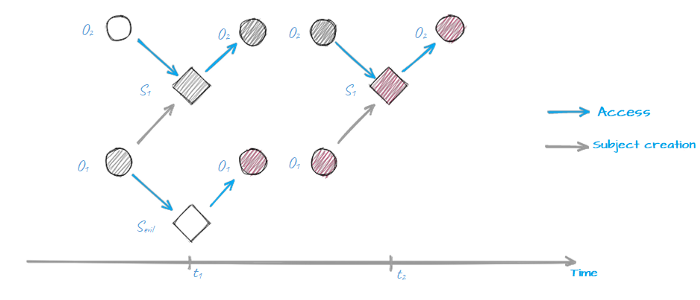

And to make it harder, let’s note that accesses by subject S to object O at points in time t1 and t2 are, in fact, two different access operations, because in the timespan between t1 and t2 both subject S and object O might have changed. Consequently, describing the set P becomes quite challenging, because it contains an infinite number of elements!

Subject S1 gains access to object O2 at t1 and at t2, but by t2 they are a totally different subject and object…

So, how can we ensure that only access operations that belong to PL are permitted in this chaos if we can’t even describe PL itself?

For starters, let’s take a closer look at the objects affecting a subject’s behavior (executable files, configuration files, etc.). Let’s say we have two subjects and we know all the objects affecting their behavior. Such objects are said to be associated with the subject.

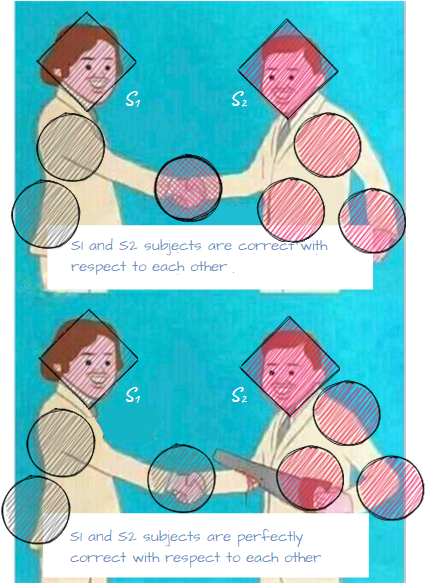

If we can ensure that each subject cannot gain access (or create an information flow) to objects associated with its neighbor, then we can call such subjects correct with respect to each other. If, in addition, the sets of objects associated with each of these subjects do not intersect, then we can call them perfectly correct with respect to each other.

Using this definition, we can formulate a criterion for guaranteed enforcement of the access policy in the system: if at the initial point in time all subjects are perfectly correct with respect to each other and can perform only access operations (generate only flows) that belong to PL, then over time they will still be able to perform only access operations from PL. Such a set of subjects is called a perfectly restricted set of subjects.
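The two definitions above can be sketched as simple set checks. This is only an illustration under stated assumptions: the dictionaries `associated` and `can_access` and all the object names are hypothetical, and "access" is reduced to a static set of reachable objects, which is much cruder than the formal model.

```python
from itertools import combinations

# Hypothetical data: objects associated with each subject
# (executables, configuration files, libraries, ...).
associated = {
    "S1": {"s1.exe", "shared.dll"},
    "S2": {"s2.exe", "s2.conf"},
    "S3": {"s3.exe", "shared.dll"},
}

# Hypothetical policy: objects each subject is able to access.
can_access = {
    "S1": {"data1.db"},
    "S2": {"data2.db"},
    "S3": {"data1.db", "s2.conf"},
}


def correct(a: str, b: str) -> bool:
    """Neither subject can access an object associated with the other."""
    return (can_access[a].isdisjoint(associated[b])
            and can_access[b].isdisjoint(associated[a]))


def perfectly_correct(a: str, b: str) -> bool:
    """Correct, and the sets of associated objects do not intersect."""
    return correct(a, b) and associated[a].isdisjoint(associated[b])


def all_perfectly_correct(subjects: list[str]) -> bool:
    """Pairwise part of the criterion: every pair is perfectly correct."""
    return all(perfectly_correct(a, b) for a, b in combinations(subjects, 2))
```

Here S1 and S2 are perfectly correct with respect to each other, S1 and S3 are correct but not perfectly correct (they share `shared.dll`), and S2 and S3 are not correct at all, because S3 can reach the configuration of S2. Note that `all_perfectly_correct` covers only the first half of the criterion; the requirement that all initial accesses belong to PL has to be checked separately.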

So, here is what looks like a perfect solution for the task! Only it’s not. In fact, this means that, for example, each user in a multi-user system works on his or her own isolated computer that can’t interact with a neighbor’s computer. What a splendid multi-user system that allows information exchange via users only…

I won’t make you suffer through mathematical subtleties, so let’s cut to the chase and go straight to the solution that allows the restricted software environment to be implemented in real life rather than in the vivid imagination of a security theorist.

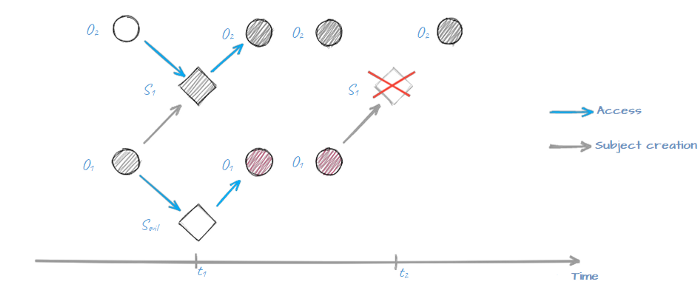

Let’s add another security capability to our model and assume that creation of a new subject S out of object O is possible only if object O has not been changed since the initial moment in time (it’s called “creation of a subject with integrity control”). This small change can make a big difference:

The sequence is broken because object O1 changed at t1, making the creation of the modified subject S1 impossible

The most important change is that we have guaranteed a finite number of subject variations in the system regardless of how long it operates. After all, we have a limited set of objects that can be used for subject creation.

This difference helps us to come up with a sensible access control algorithm.

We can describe the PL set for all subjects and all objects in such a way as to ensure that all subjects are correct with respect to each other (note that we are not talking about perfect correctness, so multiple subjects can be created from a single object). This set is finite because the number of objects at the initial point in time is finite, and so is the set of subjects that can be created. And we can be sure that nothing will change as time passes: no new subjects will appear that could get around the restrictions of the reference monitor and rewrite our policy, because creation is performed with integrity control.
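Creation of a subject with integrity control can be illustrated with a toy model. It is a sketch, not the formal construction: objects are named byte strings, the `System` class and its methods are hypothetical, and SHA-256 is my own choice of integrity check (the model itself does not prescribe one).

```python
import hashlib


def digest(data: bytes) -> str:
    """Integrity value of an object (SHA-256 is an assumption of this sketch)."""
    return hashlib.sha256(data).hexdigest()


class System:
    """Toy model: objects are named byte strings, subjects are created from them."""

    def __init__(self, objects: dict[str, bytes]):
        self.objects = dict(objects)
        # Reference digests recorded at the initial moment in time.
        self.baseline = {name: digest(data) for name, data in objects.items()}
        self.subjects: set[str] = set()

    def write(self, name: str, data: bytes) -> None:
        """Any object may be changed or added while the system runs."""
        self.objects[name] = data

    def create_subject(self, name: str) -> bool:
        """Create a subject from object `name` only if the object is unchanged."""
        if name not in self.baseline:
            return False  # the object did not exist at the initial moment
        if digest(self.objects[name]) != self.baseline[name]:
            return False  # the object was modified: creation is refused
        self.subjects.add(name)
        return True
```

Whatever happens to the objects later, the set of subjects that can ever be created stays within the objects recorded in `baseline`, which is what makes a finite description of PL possible.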

All we have left to do is make sure that this approach can actually be transferred from mathematical description to real system capabilities while keeping the obtained system security property. Let’s get it done.

4. Restricted Software Environment in Real Life

First, we’ll try to solve the problem described by the US Department of Defense (although we’d be half a century late). To let multiple users work with a single mainframe securely, we need:

- An operating system component that checks the integrity of a program’s executable file before running it. If the integrity check fails, the program is not permitted to run.

- An access control policy (e.g. in the form of an access control matrix) that defines which software has access to which files (most importantly write access, since our main problem is protecting the system’s operation algorithm from any modification that would allow the security policy to be violated). Our primary concern is software executable files (and operating system kernel components), as well as the various data files that affect the software’s operation algorithm.

Everything works as long as we have a single computer. Things get complicated when we consider a modern system that consists of multiple components connected to each other via a local network. Sure, we can always go hardcore and configure both local and network access control using IP mechanisms such as the CIPSO option (by the way, this is another interesting topic which I could cover if you’re interested); however, this is technically impossible in a heterogeneous network.
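The second requirement can be sketched as a default-deny access control matrix plus a check that nobody holds write access to the files that define software behavior. All program names, paths and the `protected` set below are hypothetical; a real operating system would enforce this in the kernel rather than in a lookup table.

```python
# Hypothetical access control matrix: (program, file) -> set of rights.
matrix = {
    ("/usr/bin/editor", "/home/alice/report.txt"): {"read", "write"},
    ("/usr/bin/editor", "/etc/editor.conf"): {"read"},
    ("/usr/bin/backup", "/home/alice/report.txt"): {"read"},
}

# Files that define how software behaves: executables and their configuration.
protected = {"/usr/bin/editor", "/usr/bin/backup", "/etc/editor.conf"}


def allowed(program: str, path: str, right: str) -> bool:
    """Default deny: a right is granted only if the matrix lists it."""
    return right in matrix.get((program, path), set())


def writable_protected() -> list[tuple[str, str]]:
    """List matrix entries that grant write access to a protected file."""
    return sorted(
        (program, path)
        for (program, path), rights in matrix.items()
        if "write" in rights and path in protected
    )
```

With the matrix above `writable_protected()` returns an empty list. As soon as someone adds an entry that lets, say, the editor write to its own configuration file, that entry shows up in the result and can be reviewed.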

Therefore, let’s list a few technical limitations of a real system and see how they fit the theory of the restricted software environment:

- We can control subjects’ integrity. However, not every creation can be stopped even if the integrity check fails (how can we stop a network switch from loading its software even if we discover that its startup configuration has been modified?).

- It’s not always possible to control individual processes’ access to objects. That same network switch has firmware that contains multiple processes accessing various objects (files, individual records, device-specific data such as CAM tables, etc.). However, the switch has no standard mechanism for defining an access control matrix for these subjects and objects.

- We can’t control subjects’ access to objects located on other network nodes. In fact, it might be theoretically possible: route all interaction through a firewall, do a thorough traffic inspection, apply a strict interaction control policy similar to an access control matrix… But in the real world things won’t work this way. Such a firewall would require tremendous computing resources, and its admin would have to be extremely patient to configure this setup.

And so, how can we solve the problem, considering all these limitations?

First of all, integrity control should not be omitted: executable files, configuration files (or more complicated objects such as databases, registry keys or LDAP directory objects) can be monitored both locally and remotely via the network.

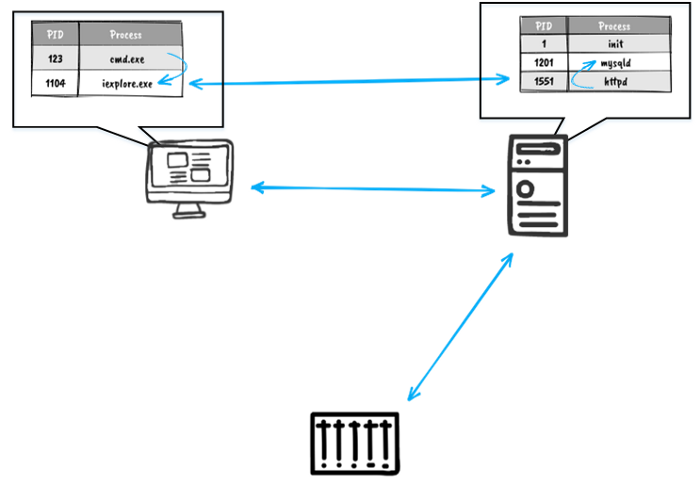

Second, we should divide all interactions into two classes: those where the subjects and objects are inside a node, and those where the subjects and objects are the network nodes themselves. “Integrity control” of network nodes would cover the invariance of the list of nodes and of their network properties (address, name, open ports, etc.).

Third, we can replace the access control matrix for subjects and objects with detection and monitoring of network flows (in this case they represent the data flows between subjects and objects). Assuming that at the initial moment (which could also be an extended interval) all accesses (flows) in the system belong to the PL set, we can treat them as legitimate and consider any detected flow that does not belong to the set formed at the initial moment a violation. However, we should always bear in mind that this assumption is valid only for systems operating under a single algorithm (or a set of very similar algorithms). For this reason, the restricted software environment model works well for all kinds of cyber-physical systems but is hardly applicable to a typical “office” network that sees a lot of changes every minute.

Two-level subject-object model. The first level deals with information flows between network nodes, the second level deals with process interactions inside each network node.
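The flow-based replacement for the access control matrix can be sketched as a monitor that learns flows during the initial interval and raises an alarm on anything else afterwards. The `Flow` fields, the `FlowMonitor` class and the addresses are assumptions made for illustration; real products identify flows in more detail.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str        # source node address
    dst: str        # destination node address
    protocol: str   # e.g. "tcp" or "udp"
    dst_port: int


class FlowMonitor:
    """Learns the set of legitimate flows, then flags unknown ones."""

    def __init__(self) -> None:
        self.baseline: set[Flow] = set()
        self.learning = True

    def stop_learning(self) -> None:
        """End of the initial interval: the baseline now plays the role of PL."""
        self.learning = False

    def observe(self, flow: Flow) -> bool:
        """Return True if the flow should raise an alarm."""
        if self.learning:
            self.baseline.add(flow)
            return False
        return flow not in self.baseline


# Example with made-up addresses: an HMI polling a PLC is learned,
# a later connection from an unknown node is flagged.
monitor = FlowMonitor()
monitor.observe(Flow("10.0.0.10", "10.0.0.20", "tcp", 502))
monitor.stop_learning()
known_alarm = monitor.observe(Flow("10.0.0.10", "10.0.0.20", "tcp", 502))
unknown_alarm = monitor.observe(Flow("10.0.0.99", "10.0.0.20", "tcp", 23))
```

The whole approach rests on the assumption stated above: everything seen during learning is legitimate. If the learning interval already contains a malicious flow, this sketch will happily treat it as part of PL.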

Let’s summarize what we’ve discussed. Implementing a restricted software environment is a good way to ensure the security of cyber-physical systems (where integrity is one of the most important properties of information).

A restricted software environment for this type of system can be established by correctly configuring the security mechanisms on each network node and by deploying a dedicated device capable of the following:

- Maintaining a database of network objects and their network parameters.

- Monitoring modifications of objects (configurations, executable files, etc.). In particular, the reference monitor configuration on each device should be monitored.

- Controlling information flows between the nodes and generating alarms upon detecting an unknown flow (since the probability that such a flow belongs to the PN set is quite high).

And so, we have successfully obtained the list of the main capabilities of the ICS Asset Management class of solutions. Coincidence? I don’t think so…
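The first capability, a database of network objects and their parameters, boils down to comparing the current inventory with a reference one. Here is a rough sketch; the `Node` fields follow the properties named earlier (address, name, open ports), while the function name and the choice to key nodes by address are my own assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    address: str
    name: str
    open_ports: frozenset[int]


def diff_inventory(baseline: dict[str, Node],
                   current: dict[str, Node]) -> dict[str, list[str]]:
    """Compare two inventories keyed by node address."""
    return {
        # Nodes that were not in the reference list.
        "new": sorted(current.keys() - baseline.keys()),
        # Nodes that disappeared from the network.
        "missing": sorted(baseline.keys() - current.keys()),
        # Nodes whose name or open ports differ from the reference.
        "changed": sorted(
            addr for addr in baseline.keys() & current.keys()
            if baseline[addr] != current[addr]
        ),
    }
```

Because nodes are keyed by address in this sketch, a node that changes its address is reported as one missing node and one new node, which is arguably the right reaction for a network that is not supposed to change.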

Capabilities of ICS Asset Management solutions according to Dale Peterson

A lot of solutions that belong to the ICS Asset Management and Detection class are available on the market today, but their basic capabilities are often very similar. And now you know why. The technology described in this article has practical application in the CL DATAPK software, CyberLympha’s flagship product, focused on securing enterprise Industrial Control Systems and OT infrastructures. More info about CyberLympha and its products is available on the company website.